Human-in-the-Loop

Overview

Human-in-the-Loop (HITL) is a critical design pattern in agent development that brings human judgment and expertise into the workflow of AI systems. In Arcanna's Agents Workflows, HITL lets agents collaborate with humans, which improves reliability, accuracy, and accountability, particularly for sensitive or complex tasks.

AI agents are designed to perform tasks autonomously, and HITL adds mechanisms for human intervention at key decision points. This is essential when the agent's confidence is low, when ethical considerations are involved, or when real-world nuances require human interpretation. With HITL, agents keep the efficiency of automation and also benefit from the contextual understanding and judgment of human operators.

Key Aspects of HITL in Arcanna's Agents

HITL is often implemented to manage specific aspects of agent operations, including:

Approval of Sensitive Actions

Agents can be configured to pause and seek explicit human approval before executing actions that have significant consequences.
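The sketch below is a rough illustration of the pattern and does not show how Arcanna implements it. It wraps a sensitive action in an approval gate. The names require_approval, ask_human, and disable_user_account are hypothetical. ask_human stands for whatever channel delivers a question to an operator and returns their reply.

```python
import functools
from typing import Any, Callable


def require_approval(action_name: str, ask_human: Callable[[str], str]):
    """Wrap a sensitive action so it only runs after a human answers "yes".

    ask_human is a hypothetical callback: it shows a prompt to an operator
    and returns their free-text reply.
    """
    def decorator(func: Callable[..., Any]) -> Callable[..., dict]:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> dict:
            prompt = f"Approve '{action_name}' with args={args} kwargs={kwargs}? (yes/no)"
            reply = ask_human(prompt).strip().lower()
            if reply not in ("y", "yes"):
                # Report the rejection instead of executing, so the agent can react to it.
                return {"status": "rejected", "action": action_name}
            return {"status": "executed", "action": action_name, "result": func(*args, **kwargs)}
        return wrapper
    return decorator


# Usage: an always-approving callback stands in for a real operator here.
@require_approval("disable_user_account", ask_human=lambda prompt: "yes")
def disable_user_account(username: str) -> str:
    # Hypothetical sensitive action; a real one would call an external system.
    return f"account {username} disabled"


print(disable_user_account("jdoe"))
# {'status': 'executed', 'action': 'disable_user_account', 'result': 'account jdoe disabled'}
```

The gate returns a status dictionary instead of raising an error. That way the calling agent sees the rejection and can respond to it, for example by explaining why the action was not taken.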

Handling Ambiguity and Low Confidence

When an agent encounters a situation it cannot confidently resolve, it can escalate the task to a human for clarification or decision-making.
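Here is a minimal sketch of threshold-based escalation. It assumes the agent's decision comes with a numeric confidence score between 0 and 1. How that score is produced (for example by a separate classifier, or by asking the model to report it) is an assumption outside this guide. Decision, decide_or_escalate, scripted_reviewer, and the 0.8 threshold are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Decision:
    label: str
    confidence: float  # assumed to be a score in [0.0, 1.0]


def decide_or_escalate(
    decision: Decision, threshold: float, ask_human: Callable[[str], str]
) -> Tuple[str, str]:
    """Return (label, source): keep the agent's label if confident enough, else ask a human."""
    if decision.confidence >= threshold:
        return decision.label, "agent"
    question = (
        f"The agent suggests '{decision.label}' with confidence "
        f"{decision.confidence:.2f}, below the {threshold:.2f} threshold. "
        "What is the correct answer?"
    )
    return ask_human(question).strip(), "human"


def scripted_reviewer(question: str) -> str:
    # Stands in for a real operator in this example.
    return "needs_investigation"


print(decide_or_escalate(Decision("benign", 0.93), 0.8, scripted_reviewer))  # ('benign', 'agent')
print(decide_or_escalate(Decision("benign", 0.41), 0.8, scripted_reviewer))  # ('needs_investigation', 'human')
```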

Iterative Feedback Loops

Humans can provide feedback and corrections to the agent's outputs, which can be used to refine the agent's behavior and improve future performance.

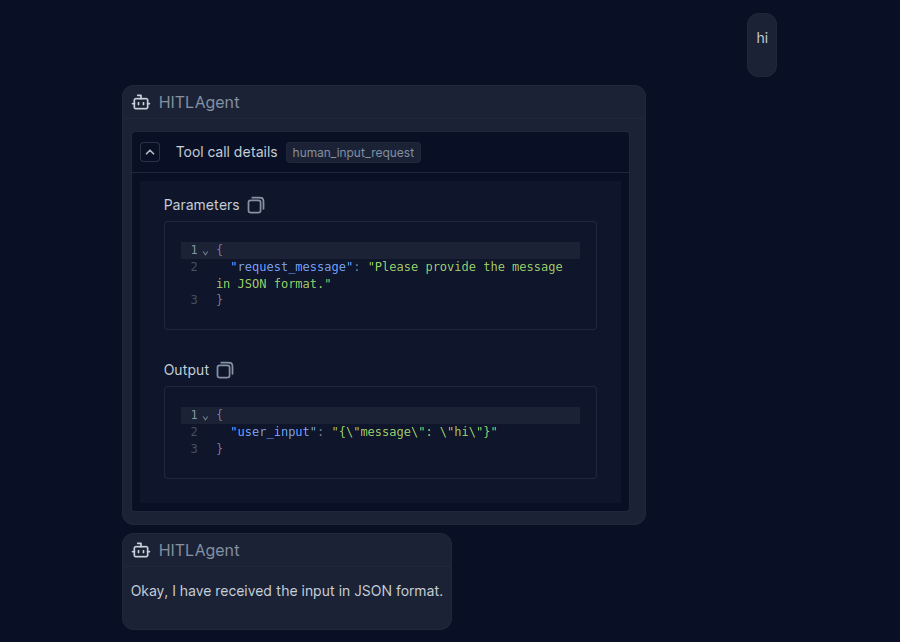
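This guide does not specify how Arcanna stores or applies feedback. One simple technique, offered here only as an illustration, is to keep a log of human corrections and fold the most recent ones into the agent's instruction as examples. FeedbackLog and build_instruction are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class FeedbackLog:
    """Collects human corrections to agent outputs (hypothetical helper)."""
    corrections: List[Tuple[str, str]] = field(default_factory=list)

    def record(self, agent_output: str, human_correction: str) -> None:
        self.corrections.append((agent_output, human_correction))

    def build_instruction(self, base_instruction: str, max_examples: int = 3) -> str:
        """Append the most recent corrections to an instruction as examples."""
        # Guard against max_examples <= 0, since corrections[-0:] would return every item.
        recent = self.corrections[-max_examples:] if max_examples > 0 else []
        if not recent:
            return base_instruction
        lines = [base_instruction, "", "Corrections from human reviewers on earlier outputs:"]
        for output, correction in recent:
            lines.append(f"- You answered {output!r}; the reviewer corrected it to {correction!r}.")
        return "\n".join(lines)


log = FeedbackLog()
log.record("Severity: low", "Severity: high")
print(log.build_instruction("Summarize the alert and assign a severity."))
```

The resulting string could be used as an agent's instruction the next time the agent is created. Whether that fits depends on how your workflow manages agent definitions.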

Input Validation and Clarification

When an agent receives input that doesn't match its expected format, structure, or requirements, it can request clarification from a human operator rather than proceeding with potentially incorrect assumptions. This prevents wasted processing time and ensures the agent operates on accurate, well-formed data from the start of the workflow.
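If the expected format can be checked mechanically, the check can run before any LLM call. The sketch below does this for JSON using Python's standard json module. The names parse_json_with_clarification and ask_human are hypothetical. A real workflow would route the question through its HITL tool rather than a local callback.

```python
import json
from typing import Any, Callable


def parse_json_with_clarification(
    raw: str, ask_human: Callable[[str], str], max_requests: int = 2
) -> Any:
    """Parse raw as JSON; if it is invalid, ask a human for a corrected message.

    Gives up with ValueError after max_requests clarification requests.
    """
    candidate = raw
    requests_made = 0
    while True:
        try:
            return json.loads(candidate)
        except json.JSONDecodeError as err:
            if requests_made >= max_requests:
                raise ValueError(
                    f"Input is still not valid JSON after {max_requests} clarification requests"
                ) from err
            requests_made += 1
            # Tell the human exactly what was wrong, then retry with their reply.
            candidate = ask_human(
                f"The input is not valid JSON ({err.msg} at line {err.lineno}, "
                f"column {err.colno}). Please provide the message in JSON format."
            )


replies = iter(['{"record_id": 42}'])  # scripted operator reply for this example
print(parse_json_with_clarification("record 42", lambda prompt: next(replies)))  # {'record_id': 42}
```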

How To Use

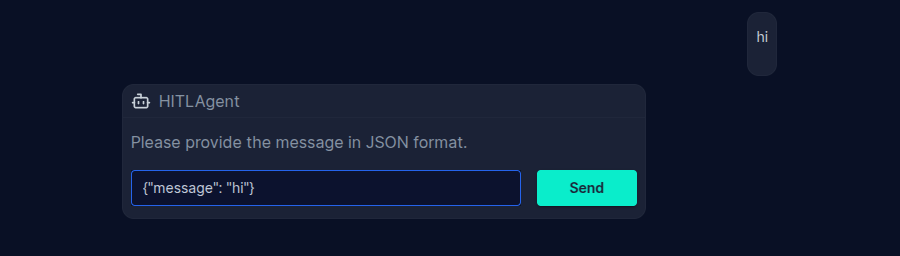

Any agent can use HITL. To enable it, add the human_input_request_tool to the tools of each agent that should be able to ask a human for input.

```python
from google.adk.agents import Agent
from google.adk.models.lite_llm import LiteLlm

# human_input_request_tool is the HITL tool provided by Arcanna's Agents Workflows.
# Its import path is not shown in this guide; make sure it is available in this module.

root_agent = Agent(
    name="HITLAgent",
    model=LiteLlm(model="gemini/gemini-2.0-flash"),
    description="Agent that validates a JSON input",
    instruction=(
        "You are an agent that has to check if the provided message is in JSON format. "
        "If the user message is not in JSON format, you must invoke the human_input_request tool "
        "and ask the user to provide a message in JSON format."
    ),
    # Giving the agent this tool is what enables HITL.
    tools=[human_input_request_tool],
)
```

A more practical example

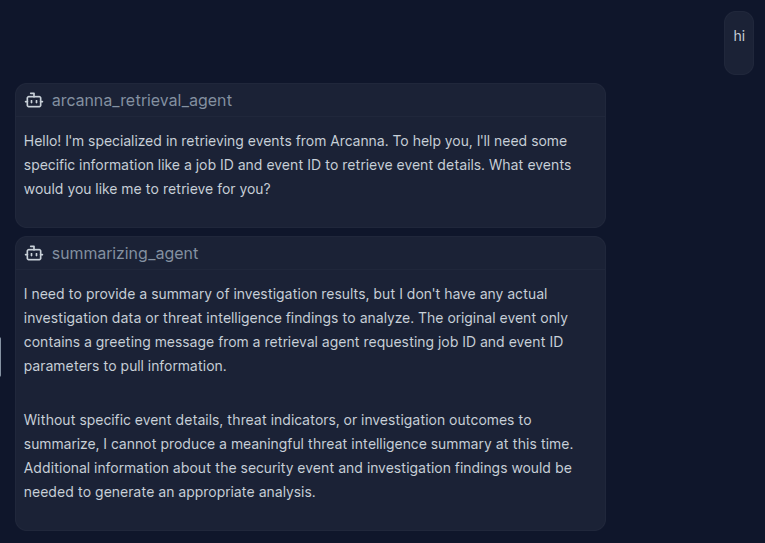

Consider a sequence of agents in a workflow:

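```python
from google.adk.agents import SequentialAgent

# arcanna_retrieval_agent and summarizing_agent are agents defined elsewhere in the workflow.
# SequentialAgent runs its sub-agents in the order listed.
parent_agent = SequentialAgent(
    name="parent_agent",
    sub_agents=[arcanna_retrieval_agent, summarizing_agent],
)
```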

Without the HITL tool

In this scenario, if the retrieval agent does not receive the input it needs, the workflow still continues to the summarizing agent, which has nothing meaningful to work on. Those LLM calls waste tokens and credits with your LLM provider.

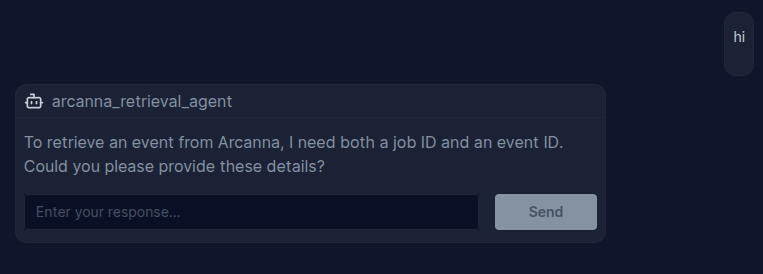

With the HITL tool

In this scenario, workflow execution is paused pending human input. Once the retrieval agent receives the required data, the workflow resumes automatically.
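The following is a plain-Python model of the two-step workflow above that makes the pause-and-resume behavior concrete. It does not use ADK or Arcanna APIs. retrieval_step and summarizing_step are hypothetical stand-ins for arcanna_retrieval_agent and summarizing_agent. The llm_calls list simply records which steps would have called a model. When a step lacks its input, it raises NeedsHumanInput, and the run stops with its state saved. resume then continues from that step once the human replies.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Step = Callable[[Context], Context]


class NeedsHumanInput(Exception):
    """Raised by a step that cannot continue without a human's answer."""

    def __init__(self, question: str, key: str):
        super().__init__(question)
        self.question = question
        self.key = key  # context key under which the answer will be stored


@dataclass
class PausedRun:
    """What is needed to resume: the paused step, its question, and its input."""
    step_index: int
    question: str
    key: str
    context: Context


def run_pipeline(steps: List[Step], context: Context, start: int = 0) -> Tuple[str, Any]:
    """Run steps in order; return ("paused", PausedRun) if a step needs human input."""
    for i in range(start, len(steps)):
        try:
            context = steps[i](context)
        except NeedsHumanInput as request:
            return "paused", PausedRun(i, request.question, request.key, context)
    return "done", context


def resume(steps: List[Step], paused: PausedRun, human_reply: str) -> Tuple[str, Any]:
    """Add the human's reply to the context and re-run from the paused step."""
    context = {**paused.context, paused.key: human_reply}
    return run_pipeline(steps, context, start=paused.step_index)


llm_calls: List[str] = []  # records which simulated LLM-backed steps actually ran


def retrieval_step(ctx: Context) -> Context:
    if "record_id" not in ctx:
        raise NeedsHumanInput("Which record should I retrieve?", key="record_id")
    llm_calls.append("retrieval")
    return {**ctx, "events": f"events for record {ctx['record_id']}"}


def summarizing_step(ctx: Context) -> Context:
    llm_calls.append("summarize")
    return {**ctx, "summary": f"Summary of {ctx['events']}"}


steps = [retrieval_step, summarizing_step]
status, state = run_pipeline(steps, {})
print(status, "|", state.question)  # paused | Which record should I retrieve?
status, state = resume(steps, state, "42")
print(status, "|", state["summary"])  # done | Summary of events for record 42
print(llm_calls)  # ['retrieval', 'summarize']
```

The pipeline stops at the retrieval step, so the summarizing step never runs on missing data. This is where the saving comes from, compared with the scenario without the HITL tool.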