AI Assistant Setup

The AI Assistant helps users with their investigations by integrating with MCP (Model Context Protocol) servers and using an LLM provider of your choice as its backbone.

The AI Assistant works with MCP servers, including remote instances, by supporting the Streamable HTTP and Server-Sent Events (SSE) transports.

For these functionalities to work, Arcanna needs to have internet access to the LLM providers and the MCP servers.

Setup

- Click the Assistant tab to access the AI Assistant.

- Click Open MCP Tools Menu to configure the AI Assistant.

Configuration Tabs Overview

The MCP Tools Menu provides four main configuration tabs:

- Overview

- MCP Config

- Assistant Config

- Variables

The Overview tab provides a comprehensive view of your AI Assistant configuration:

- Shows all configured MCP servers and their status

- Displays available tools from each MCP server

- Provides a quick health check of your setup

- Allows users to enable/disable tools or servers for their current conversation

The MCP Config tab is where you configure MCP (Model Context Protocol) servers:

- Add and configure MCP servers (Elasticsearch, Splunk, VirusTotal, etc.)

- Set environment variables for each MCP server

- Configure remote MCP servers over Streamable HTTP or SSE

The Assistant Config tab handles LLM provider settings:

- Configure cloud LLM providers (OpenAI, Azure OpenAI, Anthropic, Bedrock, Gemini)

- Set up local LLM providers (Ollama)

- Define system prompts and behavior guidelines

- Adjust model parameters (max tokens, context window)

The Variables tab manages environment and configuration variables:

- Set global environment variables

- Configure API keys and credentials

- Define reusable configuration parameters

Assistant Config

LLM Providers

We support both cloud and locally hosted LLM providers:

- Anthropic

- Azure OpenAI

- Bedrock

- Gemini

- Ollama

- OpenAI

Anthropic:

```json
{
  "anthropic": {
    "api_key": "API_KEY"
  },
  "sdk": "anthropic",
  "model": "LLM_MODEL"
}
```

Azure OpenAI:

```json
{
  "openai": {
    "endpoint": "AZURE_OPENAI_API",
    "api_key": "API_KEY",
    "api_version": "API_VERSION (defaults to '2025-01-01-preview')"
  },
  "sdk": "openai",
  "model": "LLM_MODEL"
}
```

Bedrock:

```json
{
  "bedrock": {
    "aws_access_key": "AWS_ACCESS_KEY",
    "aws_secret_key": "AWS_SECRET_KEY",
    "aws_region": "AWS_REGION"
  },
  "sdk": "bedrock",
  "model": "LLM_MODEL"
}
```

Gemini:

```json
{
  "gemini": {
    "api_key": "GEMINI_API_KEY"
  },
  "sdk": "gemini",
  "model": "GEMINI_MODEL"
}
```

For GEMINI_MODEL, all Gemini models released by Google are supported (e.g., gemini-2.0-flash, gemini-2.5-pro, gemini-2.5-flash-preview-04-17).

Ollama:

```json
{
  "ollama": {
    "endpoint": "OLLAMA_API"
  },
  "sdk": "ollama",
  "model": "LLM_MODEL"
}
```

OpenAI:

```json
{
  "openai": {
    "endpoint": "OPENAI_API (defaults to https://api.openai.com/v1)",
    "api_key": "API_KEY"
  },
  "sdk": "openai",
  "model": "LLM_MODEL"
}
```
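Every provider example above follows the same shape. There is a provider block whose key matches the "sdk" value, plus top-level "sdk" and "model" keys. The Python sketch below assembles such a config and checks for the keys the examples show. The helper name build_llm_config and the REQUIRED_KEYS table are hypothetical. Treating keys with a documented default (the OpenAI endpoint, the Azure api_version) as optional is an assumption, not Arcanna's documented schema.

```python
import json

# Keys each provider block needs, taken from the examples above.
# Keys with a documented default (OpenAI "endpoint", Azure "api_version")
# are treated as optional; this split is an assumption for the sketch.
REQUIRED_KEYS = {
    "anthropic": {"api_key"},
    "bedrock": {"aws_access_key", "aws_secret_key", "aws_region"},
    "gemini": {"api_key"},
    "ollama": {"endpoint"},
    "openai": {"api_key"},  # shared by OpenAI and Azure OpenAI
}


def build_llm_config(sdk, model, **provider_fields):
    """Return an Assistant Config dict: a provider block keyed by `sdk`,
    plus the top-level "sdk" and "model" keys."""
    if sdk not in REQUIRED_KEYS:
        raise ValueError(f"unsupported sdk: {sdk!r}")
    missing = REQUIRED_KEYS[sdk] - set(provider_fields)
    if missing:
        raise ValueError(f"sdk {sdk!r} is missing {sorted(missing)}")
    return {sdk: dict(provider_fields), "sdk": sdk, "model": model}


config = build_llm_config("gemini", "gemini-2.5-flash", api_key="GEMINI_API_KEY")
print(json.dumps(config, indent=2))
```

Keying the provider block by the SDK name mirrors the examples. The same helper therefore covers both OpenAI and Azure OpenAI, which differ only in the fields inside the "openai" block.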

Additional Configuration Options

All LLM providers support the following optional configuration parameters that can be added to any provider configuration:

System Prompt (system_prompt):

- Provides initial instructions, context, rules, and guidelines that the AI assistant receives before it processes any user request. It acts as a "meta-instruction" that shapes the assistant's behavior, persona, operational boundaries, and interaction style.

- The system prompt shapes the assistant's behavior. Combined with the MCP Tool Approval mechanism (by default, the user must approve each tool before it is executed), it acts as an effective guardrail against misuse.

- The AI Assistant already includes an internal system prompt. Add this parameter at the top level of the configuration to supply additional instructions and tailor the assistant's behavior to your needs.

Max Tokens (max_tokens):

- Controls the maximum number of tokens the AI assistant can generate in a single response.

- Helps manage response length and API costs.

- It is set inside the provider block (e.g., "openai", "bedrock", "gemini").

- If not specified, the provider's default limit applies.

Context Window Percentage (context_window_percentage):

- Sets an alert threshold, expressed as a fraction of the model's context window.

- When the conversation grows past this threshold, the user is alerted and advised to start a new conversation.

- The value must be between 0 and 1.
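The threshold check can be pictured as a simple ratio. The sketch below assumes the alert fires once the tokens used in the conversation exceed context_window_percentage times the model's context window. The function name should_warn and the 128,000-token window in the usage lines are illustrative only, and Arcanna's exact token accounting is not described here.

```python
def should_warn(used_tokens, context_window, context_window_percentage):
    """Return True once the conversation uses more than the configured
    fraction of the model's context window."""
    if not 0 <= context_window_percentage <= 1:
        raise ValueError("context_window_percentage must be between 0 and 1")
    threshold = context_window * context_window_percentage
    return used_tokens > threshold


# Hypothetical 128,000-token window with a value of 0.9: the threshold is 115,200 tokens.
print(should_warn(100_000, 128_000, 0.9))  # False
print(should_warn(120_000, 128_000, 0.9))  # True
```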

Arcanna Platform Prompt (enabled_platform_prompt):

- Optionally adds a platform prompt to each conversation. This prompt describes what Arcanna is and what it is used for. It is approximately 10,000 characters long.

- Enabling it gives the LLM better context. However, if you are using a smaller model, leave it disabled (the default).

- Valid values are true and false (defaults to false).
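To see why a smaller model should keep the platform prompt disabled, estimate how much of its context window roughly 10,000 characters would occupy. This sketch uses the common rule of thumb of about 4 characters per token for English text, which is only an approximation. Real counts depend on the model's tokenizer, and the 8,192-token window stands in for a hypothetical small model.

```python
def prompt_share(prompt_chars, context_window, chars_per_token=4.0):
    """Estimate the fraction of a context window taken by a prompt of
    `prompt_chars` characters, using a rough chars-per-token ratio."""
    estimated_tokens = prompt_chars / chars_per_token
    return estimated_tokens / context_window


# About 2,500 estimated tokens: roughly 30% of a small window, 2% of a large one.
print(f"{prompt_share(10_000, 8_192):.1%}")    # 30.5%
print(f"{prompt_share(10_000, 128_000):.1%}")  # 2.0%
```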

Disable Tool Calling (disable_tool_calling):

- Set this to true to turn off tool calling for models that don't handle it well. Some smaller models struggle with tool calling, and others support it only partially by design.

- Valid values are true and false (defaults to false).

Example with additional options:

```json
{
  "openai": {
    "endpoint": "https://api.openai.com/v1",
    "api_key": "API_KEY",
    "max_tokens": 4000
  },
  "sdk": "openai",
  "model": "gpt-4",
  "system_prompt": "You are a helpful cybersecurity assistant.",
  "context_window_percentage": 0.9,
  "enabled_platform_prompt": false,
  "disable_tool_calling": false
}
```
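A quick pre-flight check can catch two kinds of mistake. One is a misspelled option key, for example dsable_tool_calling instead of disable_tool_calling. The other is a context_window_percentage outside 0 to 1. The sketch below is hypothetical. It treats the options listed in this section as the only allowed top-level keys besides "sdk", "model", and the provider block, which is an assumption, since Arcanna may accept others. It also expects max_tokens inside the provider block, as documented above.

```python
import json

# Optional top-level keys documented in this section. Treating them as the
# only allowed extras (besides "sdk", "model" and the provider block) is an
# assumption made for this sketch.
OPTIONAL_KEYS = {
    "system_prompt": str,
    "context_window_percentage": float,
    "enabled_platform_prompt": bool,
    "disable_tool_calling": bool,
}


def check_assistant_config(config):
    """Return a list of problems found in an Assistant Config dict (empty if none)."""
    problems = []
    sdk = config.get("sdk")
    provider = config.get(sdk) if isinstance(sdk, str) else None
    if not isinstance(provider, dict):
        problems.append(f"missing provider block for sdk {sdk!r}")
    elif "max_tokens" in provider:
        max_tokens = provider["max_tokens"]
        # bool is a subclass of int in Python, so rule it out explicitly
        if isinstance(max_tokens, bool) or not isinstance(max_tokens, int) or max_tokens <= 0:
            problems.append("max_tokens must be a positive integer")

    for key in config:
        if key not in OPTIONAL_KEYS and key not in ("sdk", "model", sdk):
            problems.append(f"unknown key {key!r}")

    for key, expected in OPTIONAL_KEYS.items():
        if key not in config:
            continue
        value = config[key]
        if expected is float:
            # Accept ints (0 or 1) as well as floats, but not booleans, within [0, 1]
            ok = isinstance(value, (int, float)) and not isinstance(value, bool) and 0 <= value <= 1
        else:
            ok = isinstance(value, expected)
        if not ok:
            problems.append(f"invalid value for {key!r}: {value!r}")
    return problems


example = json.loads("""{
  "openai": {"api_key": "API_KEY", "max_tokens": 4000},
  "sdk": "openai",
  "model": "gpt-4",
  "context_window_percentage": 0.9,
  "dsable_tool_calling": false
}""")
print(check_assistant_config(example))  # ["unknown key 'dsable_tool_calling'"]
```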

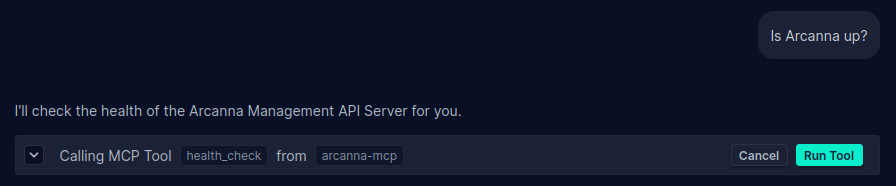

MCP Tool Approval mechanism

- By default, the user is asked to approve each tool before it is executed.

- To bypass the tool approval mechanism and execute all tools automatically, click the flash icon in the message input area. Click the flash icon again to turn automatic execution off.
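Conceptually, the approval flow is a gate in front of every tool call. The sketch below models that behavior with hypothetical names: run_tool, ask_user, and auto_execute, where auto_execute stands in for the flash icon. It illustrates the behavior described above and is not Arcanna's implementation.

```python
from typing import Any, Callable, Dict


def run_tool(
    name: str,
    args: Dict[str, Any],
    tool: Callable[..., Any],
    ask_user: Callable[[str, Dict[str, Any]], bool],
    auto_execute: bool = False,
) -> Any:
    """Run `tool` only if the user approves it, unless auto-execute is on."""
    if not auto_execute and not ask_user(name, args):
        # The user declined: report it instead of executing the tool.
        return {"status": "rejected", "tool": name}
    return tool(**args)


def lookup_ip(ip: str) -> str:
    return f"looked up {ip}"  # hypothetical stand-in for a real MCP tool


def deny(name: str, args: Dict[str, Any]) -> bool:
    return False  # simulates the user declining the tool


print(run_tool("lookup_ip", {"ip": "192.0.2.1"}, lookup_ip, deny))
print(run_tool("lookup_ip", {"ip": "192.0.2.1"}, lookup_ip, deny, auto_execute=True))
```

With auto_execute on, ask_user is never consulted, which matches clicking the flash icon to skip approval.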

MCP Config

The AI Assistant comes with several pre-installed MCP servers. To use them, simply add the configuration for each server you want to enable in your JSON config.

Available Pre-installed MCP Servers:

| MCP Server | Description | Repository/URL |
|---|---|---|
| Arcanna | Access Arcanna platform functionality | Internal Documentation |
| Arcanna Input | Input data management for Arcanna | Internal Documentation |
| Elasticsearch | Query and search Elasticsearch indices | elasticsearch-mcp-server |
| Splunk | Search and analyze Splunk data | splunk-mcp-server |
| VirusTotal | Scan files and URLs for malware | @burtthecoder/mcp-virustotal |
| Shodan | Search for internet-connected devices | @burtthecoder/mcp-shodan |
| Slack | Send messages and manage Slack workspaces | @modelcontextprotocol/server-slack |
| OpenAI | Integration with OpenAI API | @mzxrai/mcp-openai |
| Sequential Thinking | Structured problem-solving and reasoning | @modelcontextprotocol/server-sequential-thinking |

How to Configure MCP Servers:

Below are configuration examples for each MCP server. Add only the servers you need to your MCP JSON config:

- Elasticsearch

- VirusTotal

- Shodan

- Arcanna

- Splunk

- Arcanna Input

- Slack

- OpenAI MCP

- Sequential Thinking

Elasticsearch:

```json
{
  "elasticsearch-mcp-server": {
    "command": "/opt/venv/bin/elasticsearch-mcp-server",
    "args": [],
    "env": {
      "ELASTICSEARCH_HOSTS": "https://your-elasticsearch-host:port",
      "ELASTICSEARCH_USERNAME": "your-username",
      "ELASTICSEARCH_PASSWORD": "your-password"
    }
  }
}
```

VirusTotal:

```json
{
  "virustotal-mcp": {
    "command": "/usr/local/bin/mcp-virustotal",
    "args": [],
    "env": {
      "VIRUSTOTAL_API_KEY": "your-virustotal-api-key"
    }
  }
}
```

Shodan:

```json
{
  "shodan-mcp": {
    "command": "/usr/local/bin/mcp-shodan",
    "args": [],
    "env": {
      "SHODAN_API_KEY": "your-shodan-api-key"
    }
  }
}
```

Arcanna:

```json
{
  "arcanna-mcp": {
    "command": "/opt/venv/bin/arcanna-mcp-server",
    "args": [],
    "env": {
      "ARCANNA_MANAGEMENT_API_KEY": "your-arcanna-api-key",
      "ARCANNA_HOST": "your-arcanna-host"
    }
  }
}
```

Splunk:

```json
{
  "splunk-mcp-server": {
    "command": "/root/.local/bin/poetry",
    "args": [
      "--directory",
      "/app/splunk_mcp/",
      "run",
      "python",
      "splunk_mcp.py",
      "stdio"
    ],
    "env": {
      "SPLUNK_HOST": "your-splunk-host",
      "SPLUNK_PORT": "8089",
      "SPLUNK_USERNAME": "your-username",
      "SPLUNK_PASSWORD": "your-password",
      "SPLUNK_SCHEME": "https",
      "VERIFY_SSL": false,
      "FASTMCP_LOG_LEVEL": "INFO"
    }
  }
}
```

Arcanna Input:

```json
{
  "arcanna-input-mcp": {
    "command": "/opt/venv/bin/arcanna-mcp-input-server",
    "args": [],
    "env": {
      "ARCANNA_INPUT_API_KEY": "your-input-api-key",
      "ARCANNA_HOST": "your-arcanna-host",
      "ARCANNA_USER": "your-username"
    }
  }
}
```

Slack:

```json
{
  "slack-mcp": {
    "command": "/usr/local/bin/mcp-server-slack",
    "args": [],
    "env": {
      "SLACK_BOT_TOKEN": "your-slack-bot-token",
      "SLACK_TEAM_ID": "your-team-id"
    }
  }
}
```

OpenAI MCP:

```json
{
  "openai-mcp": {
    "command": "/usr/local/bin/mcp-openai",
    "args": [],
    "env": {
      "OPENAI_API_KEY": "your-openai-api-key"
    }
  }
}
```

Sequential Thinking:

```json
{
  "sequential-thinking": {
    "command": "/usr/local/bin/mcp-server-sequential-thinking",
    "args": []
  }
}
```
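The server snippets above are separate JSON objects. To enable several servers at once, they presumably need to be combined into one object whose top-level keys are the server names, as each snippet's shape suggests. That merged format is an assumption here, not something this page states. The hypothetical helper merge_mcp_servers merges snippets and rejects duplicate server names.

```python
import json


def merge_mcp_servers(*snippets):
    """Combine single-server JSON snippets into one MCP config object."""
    merged = {}
    for snippet in snippets:
        for name, server in json.loads(snippet).items():
            if name in merged:
                raise ValueError(f"duplicate MCP server name: {name!r}")
            merged[name] = server
    return merged


shodan = (
    '{"shodan-mcp": {"command": "/usr/local/bin/mcp-shodan", "args": [],'
    ' "env": {"SHODAN_API_KEY": "your-shodan-api-key"}}}'
)
thinking = (
    '{"sequential-thinking": {"command": '
    '"/usr/local/bin/mcp-server-sequential-thinking", "args": []}}'
)
mcp_config = merge_mcp_servers(shodan, thinking)
print(json.dumps(mcp_config, indent=2))
```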

Remote MCP Servers Configuration:

Before using this feature, ensure that the specified MCP server implements Streamable HTTP or Server-Sent Events (SSE). If so, add it to the configuration as follows:

```json
{
  "sse_mcp_server": {
    "url": "http://sse_enabled_mcp_server_address:port/path"
  }
}
```
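A remote entry only needs a name and a URL. The sketch below builds one in the shape shown above and rejects URLs that are not http(s), that lack a host, or that have a malformed port. The helper name, the server name, and the example address (including the /mcp path) are hypothetical. The sketch does not check whether the server actually speaks Streamable HTTP or SSE.

```python
from urllib.parse import urlparse


def remote_mcp_entry(name, url):
    """Return a {"<name>": {"url": "<url>"}} entry for a remote MCP server."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        raise ValueError(f"expected an http(s) URL with a host, got {url!r}")
    _ = parsed.port  # raises ValueError if the port is not a valid number
    return {name: {"url": url}}


print(remote_mcp_entry("remote_mcp_server", "http://mcp.example.internal:8000/mcp"))
```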

Variables

The Variables tab allows you to define environment variables that can be reused across both MCP and Assistant configurations using Jinja template syntax (e.g., {{ variable_name }}).

Usage

- Click "Add variable" to create a new variable

- Set the variable name and value

- Reference the variable in your configurations using {{ VARIABLE_NAME }}

Example

Define a variable:

- Variable Name: ELASTICSEARCH_HOSTS

- Variable Value: http://localhost:9200

Use in MCP Config:

```json
{
  "elasticsearch-mcp-server": {
    "env": {
      "ELASTICSEARCH_HOSTS": "{{ ELASTICSEARCH_HOSTS }}"
    }
  }
}
```
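Arcanna resolves these placeholders with Jinja template syntax. The sketch below is a simplified stand-in written with Python's standard library. It handles only plain {{ NAME }} placeholders, with no filters, expressions, or control blocks. As a design choice of the sketch, it substitutes inside parsed string values rather than raw JSON text, so a value containing quotes cannot break the JSON. Whether Arcanna renders before or after parsing is not documented here. The helper name render is hypothetical, and an undefined variable raises KeyError.

```python
import json
import re

# Matches "{{ NAME }}" with optional inner spaces: a small subset of Jinja syntax.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render(value, variables):
    """Recursively replace {{ NAME }} placeholders in every string of a parsed config."""
    if isinstance(value, str):
        def substitute(match):
            name = match.group(1)
            if name not in variables:
                raise KeyError(f"undefined variable: {name}")
            return str(variables[name])
        return PLACEHOLDER.sub(substitute, value)
    if isinstance(value, dict):
        return {key: render(item, variables) for key, item in value.items()}
    if isinstance(value, list):
        return [render(item, variables) for item in value]
    return value  # numbers, booleans and null are left unchanged


mcp_config = json.loads(
    '{"elasticsearch-mcp-server": {"env": {"ELASTICSEARCH_HOSTS": "{{ ELASTICSEARCH_HOSTS }}"}}}'
)
rendered = render(mcp_config, {"ELASTICSEARCH_HOSTS": "http://localhost:9200"})
print(json.dumps(rendered))
```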