Feedback & Training

The next phase of the AI Use Case learning process is Feedback and Training. For more information on the underlying concepts, refer to the Concepts -> Feedback and Training section.

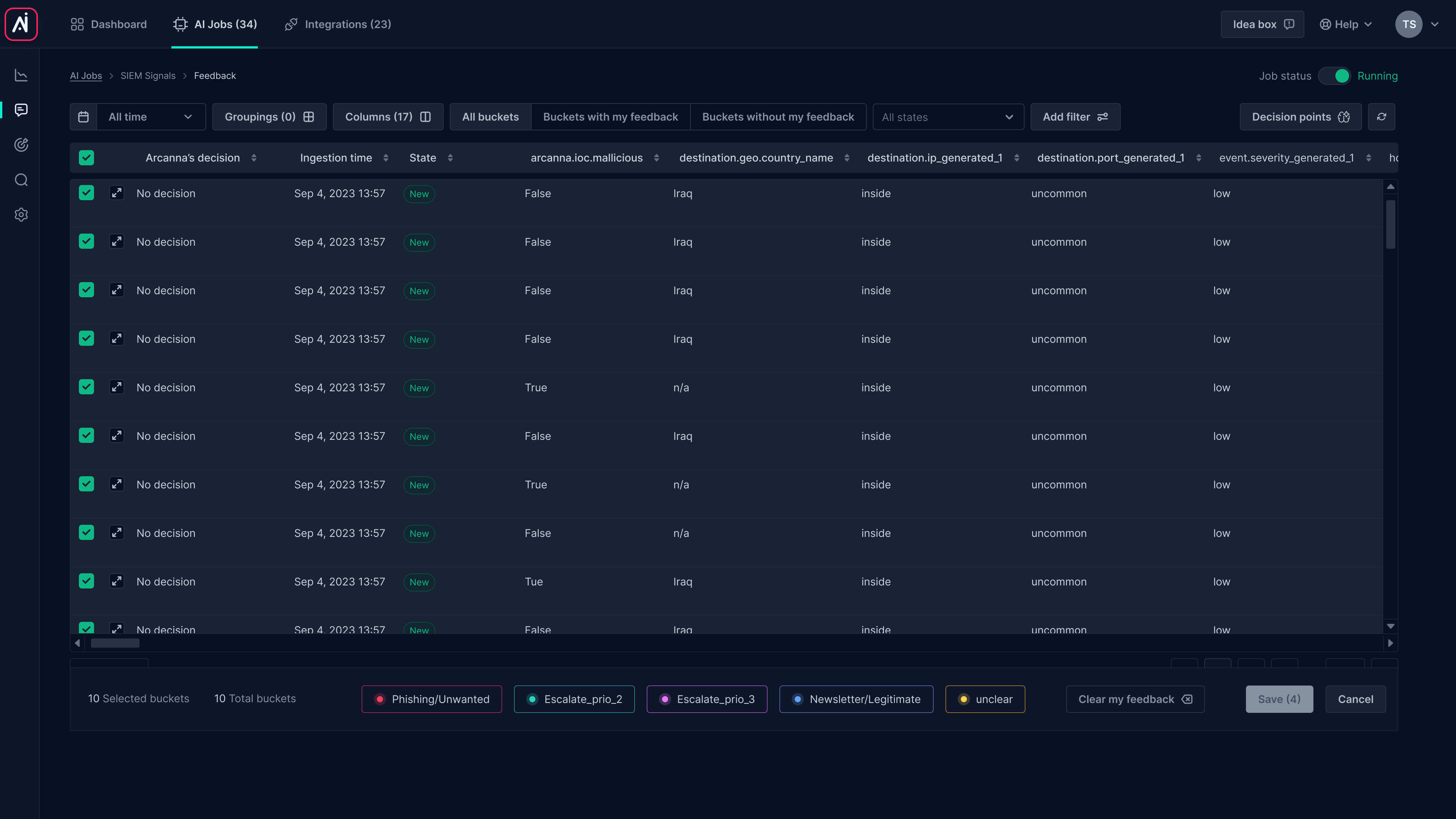

Bulk feedback

Bulk feedback means giving feedback on multiple buckets at the same time. To do so, follow these two steps:

- Click the AI Use Case you want to provide feedback for and go to the Feedback page. Select the buckets you want to provide feedback for using the checkbox in front of each bucket. Then, using the buttons at the bottom of the screen that correspond to the classes defined for the use case, choose the class that matches your decision.

- Once you have selected the class, click the Save button.

The feedback process can be repeated as many times as needed, with no limits on time or on the number of alerts. All feedback provided within a session is stored and will be incorporated into the AI models once the training process is triggered.

Single bucket feedback

Another option is to give feedback on individual buckets. To do so, expand the bucket and, at the bottom of the page, select the decision you consider correct for it. After a training session has taken place, Arcanna also offers its own decision; to confirm or change it, click the corresponding class.

In the expanded bucket view, you can also see the decision breakdown, which includes details on the consensus and on Arcanna’s decisions over time for this type of event. For more insight into why Arcanna made a particular decision, check the AI explainability page, where a comparison with other events that received feedback helps explain the outcome.

Once you have labeled enough buckets, go to the Use case overview page from the left menu.

Click the Train now button to start the training process. At this point, all feedback provided within the session is used as training data for the models, together with the knowledge accumulated in past feedback sessions.
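The session behavior described above (bulk and single-bucket feedback collected during a session, then merged with past feedback when training is triggered) can be sketched as a small in-memory store. This is a minimal Python illustration, not Arcanna's implementation or API: `FeedbackStore`, `label_bulk`, `label_single`, `train_now`, and the class names `escalate` and `drop` are hypothetical, and it assumes that a newer label for the same bucket replaces an older one.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FeedbackStore:
    """Hypothetical model of how session feedback could accumulate before training."""

    # bucket_id -> class label, from earlier (already trained) sessions
    past_feedback: Dict[str, str] = field(default_factory=dict)
    # bucket_id -> class label, from the current session
    session_feedback: Dict[str, str] = field(default_factory=dict)

    def label_bulk(self, bucket_ids: List[str], label: str) -> None:
        # Bulk feedback: the same class is applied to every selected bucket.
        for bucket_id in bucket_ids:
            self.session_feedback[bucket_id] = label

    def label_single(self, bucket_id: str, label: str) -> None:
        # Single bucket feedback: confirm or change the decision for one bucket.
        self.session_feedback[bucket_id] = label

    def train_now(self) -> Dict[str, str]:
        # Assumption: the current session's labels override older labels for the same bucket.
        training_set = {**self.past_feedback, **self.session_feedback}
        self.past_feedback = training_set
        self.session_feedback = {}  # a new session starts empty
        return training_set
```

For example, labeling buckets `b1` and `b2` as `escalate` in bulk, then changing `b2` to `drop` individually, yields a training set of `{"b1": "escalate", "b2": "drop"}` when `train_now()` is called.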

Please refer to the Concepts -> Feedback and Training section for additional information about Feedback and Training concepts.

Use case overview

The Use case overview dashboard provides information about model training and model performance. Based on it, you can decide when to retrain the models.

-

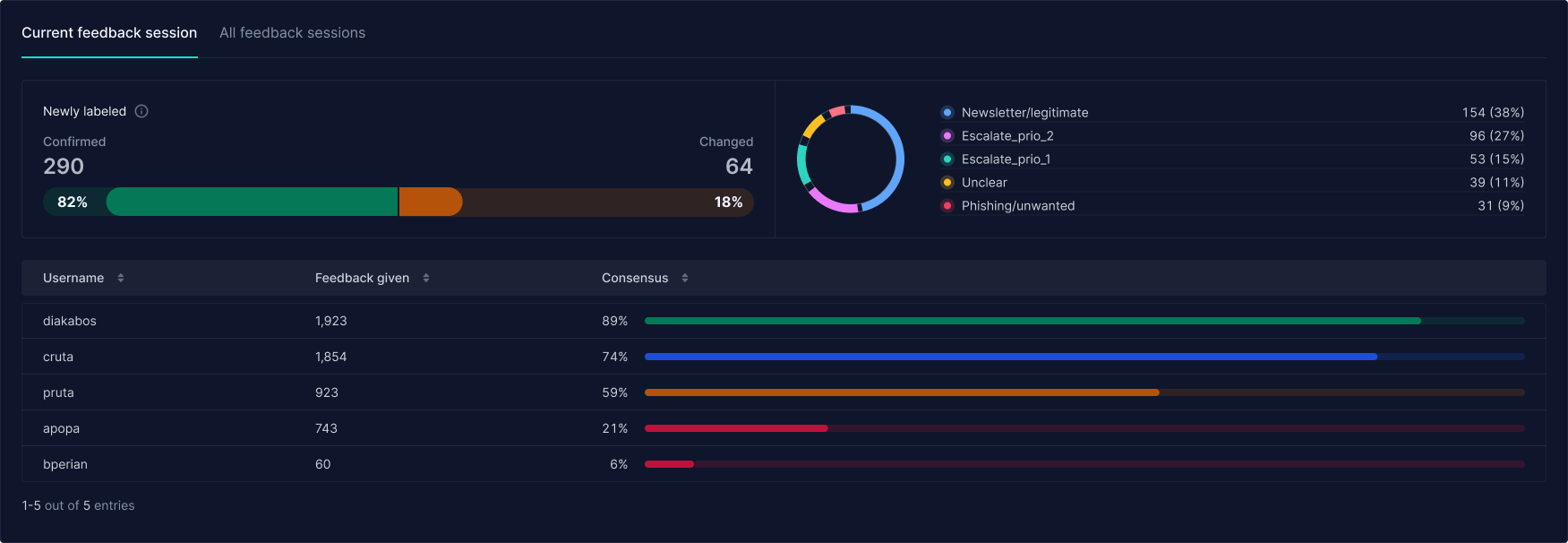

Current feedback session - displays metrics related to the current feedback session: how many buckets were provided feedback for, how the model prediction was changed or confirmed, the level of consensus, with a breakdown per analyst - who provided feedback

-

All feedback sessions - provides the same metrics cumulated for the entire lifecycle of the AI Use Case:
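To make the session metrics more concrete, the sketch below computes them from a list of feedback entries. It is a hypothetical Python illustration: the `Feedback` record and `session_metrics` function are not Arcanna APIs, a feedback entry counts as "confirmed" when the analyst's label equals the model prediction, and "consensus" is assumed to be the average, across buckets, of the share of analysts who agree with the most common label. Arcanna's own definitions may differ.

```python
from collections import Counter, defaultdict
from typing import Dict, List, NamedTuple


class Feedback(NamedTuple):
    bucket_id: str
    analyst: str
    model_prediction: str  # the model's decision shown to the analyst
    label: str  # the class the analyst chose


def session_metrics(feedback: List[Feedback]) -> dict:
    # Confirmed: analyst kept the model's decision; changed: analyst picked another class.
    confirmed = sum(1 for f in feedback if f.label == f.model_prediction)
    changed = len(feedback) - confirmed

    labels_by_bucket: Dict[str, List[str]] = defaultdict(list)
    for f in feedback:
        labels_by_bucket[f.bucket_id].append(f.label)

    # Assumed consensus: share of analysts agreeing with the majority label in each bucket.
    agreement = [
        Counter(labels).most_common(1)[0][1] / len(labels)
        for labels in labels_by_bucket.values()
    ]
    consensus = sum(agreement) / len(agreement) if agreement else 0.0

    return {
        "buckets_with_feedback": len(labels_by_bucket),
        "confirmed": confirmed,
        "changed": changed,
        "consensus": consensus,
        "per_analyst": dict(Counter(f.analyst for f in feedback)),
    }
```

Accumulating the same computation over every session's entries, rather than only the current one, gives the "All feedback sessions" view.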

Use case summary

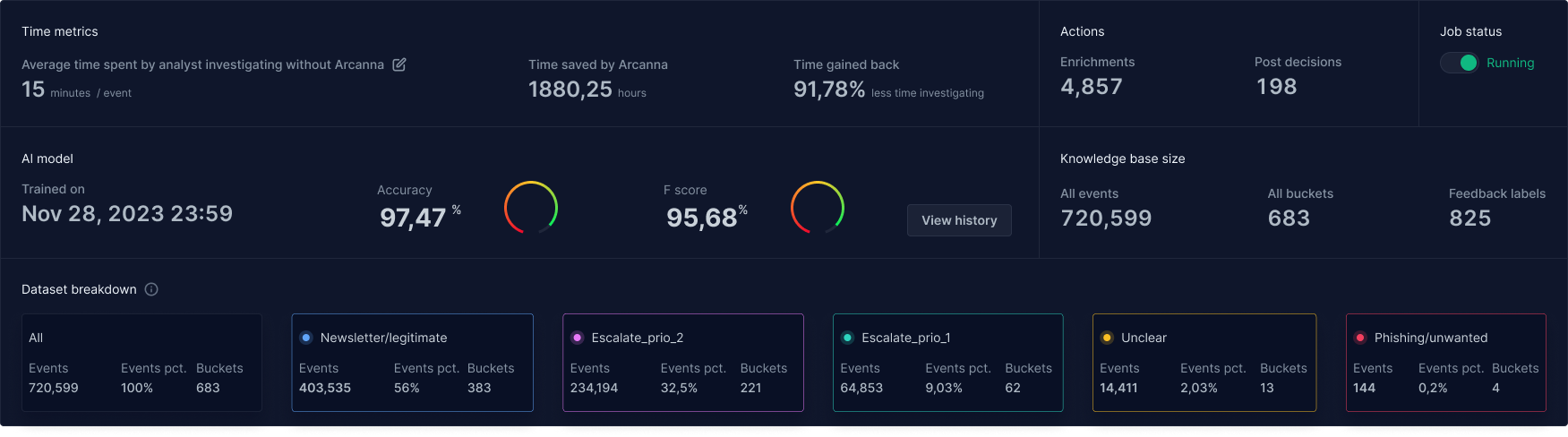

Use case summary is a dedicated dashboard that provides metrics on AI model performance and predicted outputs.

It shows overall time-saved metrics, changed versus confirmed classes, performance metrics such as accuracy and F-score, and a model history view listing all training rounds and the performance of each resulting model. You can also download the data and the models, or roll back to a previous model version.

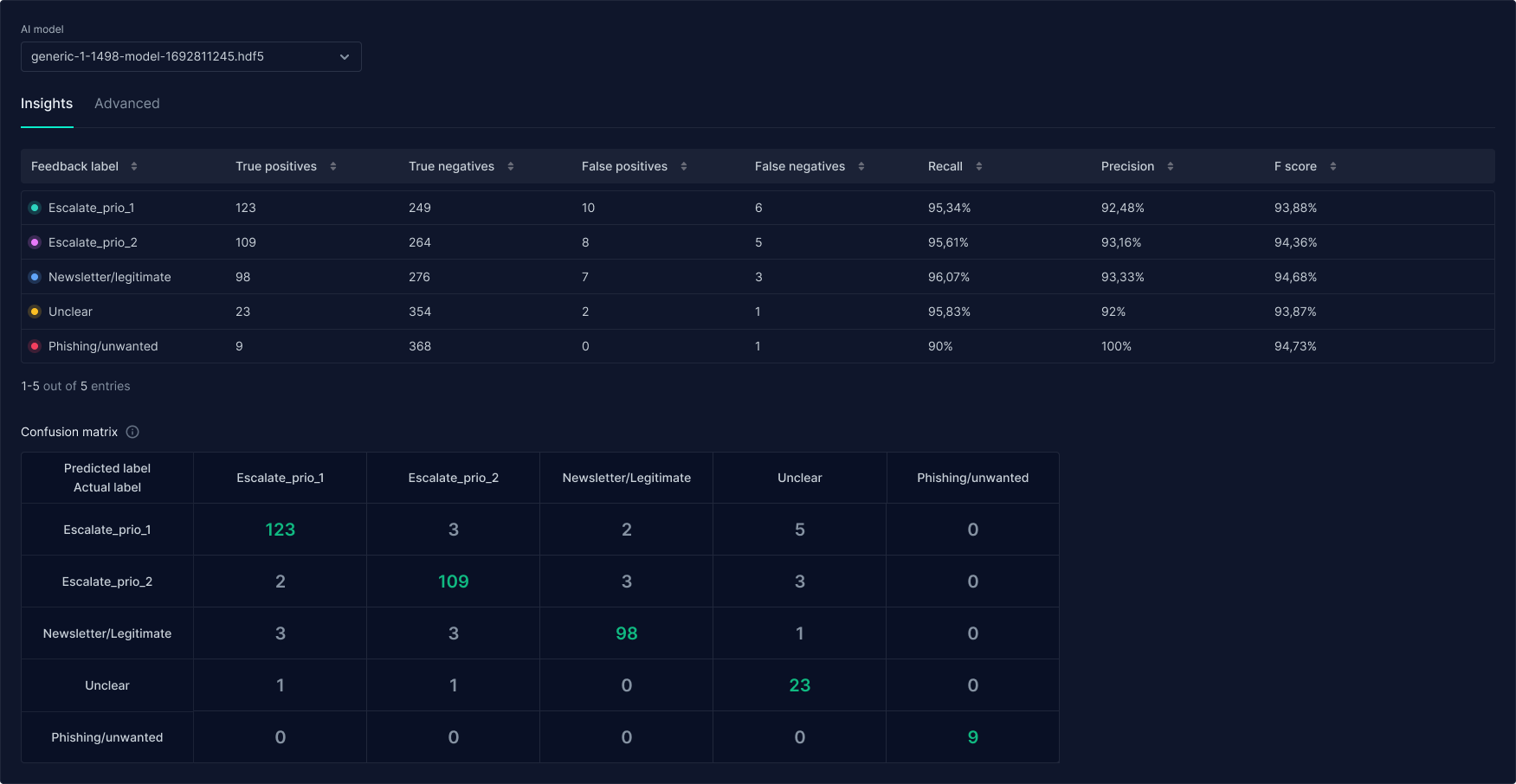

Performance metrics

The performance metrics can be viewed per model version and reflect how well the models learned to predict the classes you defined. Standard metrics include precision, recall, and F-score per class, together with the number of true positives, true negatives, false positives, and false negatives.
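The per-class metrics follow the standard one-vs-rest definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F-score as their harmonic mean. The sketch below computes them from a list of true labels (the feedback) and a list of model predictions. It assumes the F-score is F1 (equal weight on precision and recall), which is the common default, and the function name `per_class_metrics` is hypothetical.

```python
from typing import Dict, List


def per_class_metrics(y_true: List[str], y_pred: List[str]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest TP/TN/FP/FN, precision, recall and F1 for each class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    results = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == cls and p == cls)  # correctly predicted as cls
        fp = sum(1 for t, p in pairs if t != cls and p == cls)  # wrongly predicted as cls
        fn = sum(1 for t, p in pairs if t == cls and p != cls)  # cls missed by the model
        tn = len(pairs) - tp - fp - fn  # neither labeled nor predicted as cls
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[cls] = {
            "tp": tp, "tn": tn, "fp": fp, "fn": fn,
            "precision": precision, "recall": recall, "f_score": f1,
        }
    return results
```

With the hypothetical classes `escalate` and `drop`, true labels `["escalate", "escalate", "drop", "drop"]` and predictions `["escalate", "drop", "drop", "drop"]` give `escalate` a precision of 1.0 and a recall of 0.5, so its F1 is about 0.67.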

It also displays the confusion matrix, which shows how many times the model's decision was correct and, when it was wrong, which classes it confused.
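A confusion matrix counts, for every pair of classes, how often an event with a given true class received a given predicted class. The diagonal holds the correct decisions, and accuracy is the diagonal total divided by the overall total. The sketch below assumes rows are true classes and columns are predicted classes (the Arcanna view may use the opposite orientation); `confusion_matrix` and `accuracy` are illustrative names.

```python
from typing import List


def confusion_matrix(y_true: List[str], y_pred: List[str], classes: List[str]) -> List[List[int]]:
    """matrix[i][j] = number of events whose true class is classes[i] and predicted class is classes[j]."""
    index = {cls: i for i, cls in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def accuracy(matrix: List[List[int]]) -> float:
    # Correct decisions sit on the diagonal; off-diagonal cells show which classes were confused.
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0
```

For the same hypothetical example as above, `confusion_matrix(["escalate", "escalate", "drop", "drop"], ["escalate", "drop", "drop", "drop"], ["escalate", "drop"])` returns `[[1, 1], [0, 2]]`: one `escalate` event was confused with `drop`, and the accuracy is 0.75.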

Advanced metrics include the ROC curve and the data distribution across the dataset (value breakdown per decision point and frequency in the dataset).
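An ROC curve plots the true positive rate against the false positive rate as the decision threshold on the model's score is lowered. The sketch below builds the curve for one class treated as positive (one-vs-rest) and computes the area under it with the trapezoidal rule. It assumes that a per-event confidence score for the positive class is available; `roc_curve` and `auc` are illustrative names, not Arcanna functions.

```python
from typing import List, Tuple


def roc_curve(labels: List[int], scores: List[float]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points, using each distinct score as a threshold (highest first)."""
    positives = sum(labels)  # labels are 1 for the positive class, 0 otherwise
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("ROC needs at least one positive and one negative example")
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    # Trapezoidal area under the piecewise-linear ROC curve.
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area
```

A model that ranks every positive event above every negative one reaches an area of 1.0, while random scoring tends toward 0.5.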